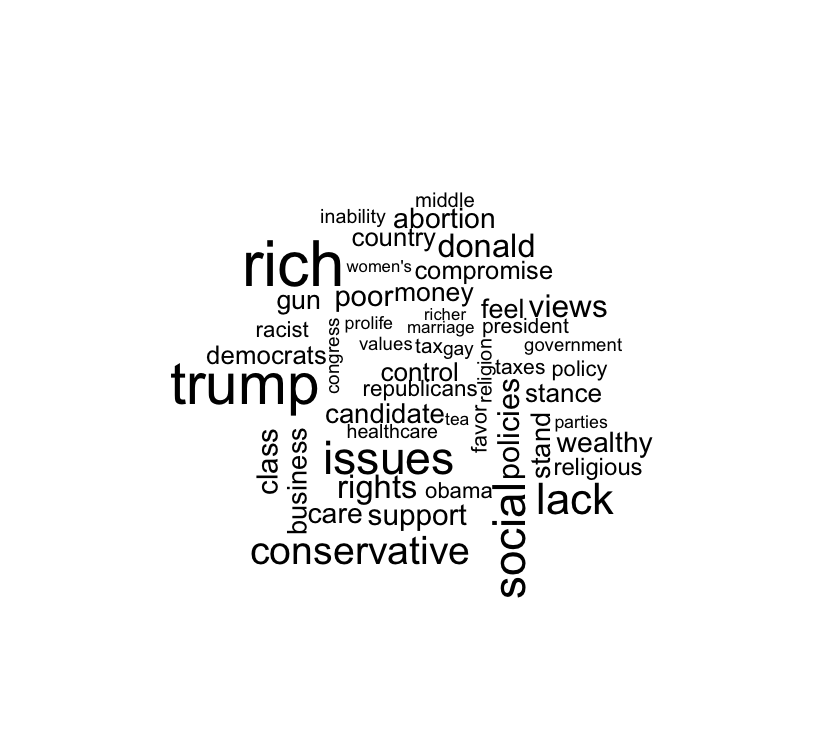

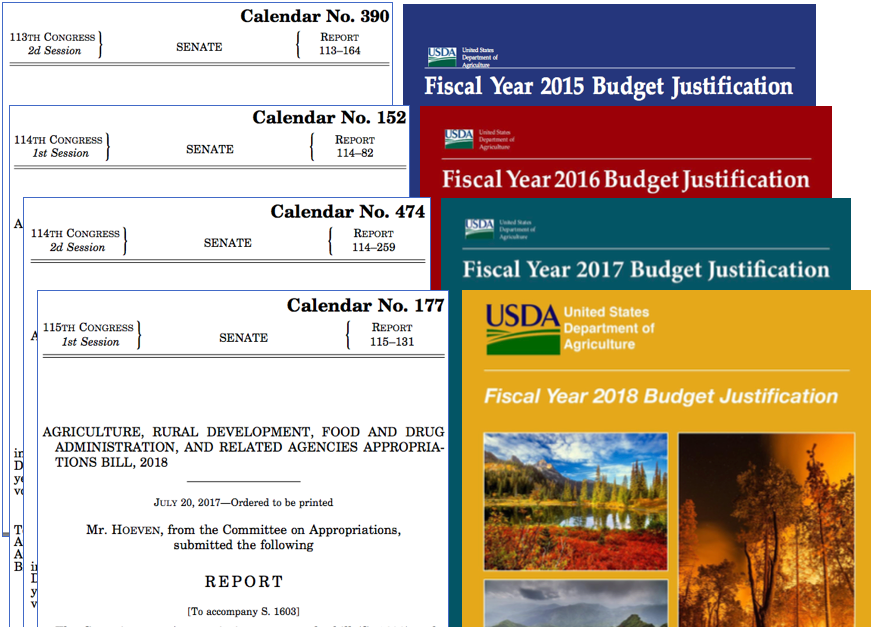

class: center, middle, inverse, title-slide

# Tidy text analysis
### Devin Judge-Lord

---

## 1. Counting

--

- text features `\(\in\)`\{all* words, some words, phrases, etc.\}

---

## 1. Counting

- text features `\(\in\)`\{all* words, some words, phrases, etc.\}

## 2. Matching

--

- Exactly* the same string ("regular expressions," text reuse)

---

## 1. Counting

- text features `\(\in\)`\{all* words, some words, phrases, etc.\}

## 2. Matching

- Exactly* the same string ("regular expressions," text reuse)

## 3. Classifying

---

## 1. Counting

- Text features `\(\in\)`\{all* words, some words, phrases, etc.\}

## 2. Matching

- Exactly* the same string ("regular expressions," text reuse)
- "Fuzzy" matching is exact matching against a set of variants

## 3. Classifying

- Rules vs. probability
- Supervised vs. unsupervised

---

## Resources

- [Tidy text class by Andrew Heiss](https://datavizf18.classes.andrewheiss.com/class/11-class/)
- [`tidytext`](https://cran.r-project.org/web/packages/tidytext/tidytext.pdf) package
- Regular expressions with `stringr` ([cheatsheet](http://edrub.in/CheatSheets/cheatSheetStringr.pdf))
- [Tidy text analysis](https://www.tidytextmining.com/) including [topic modeling](https://www.tidytextmining.com/topicmodeling.html) and [tidy() for Structural Topic Models](https://juliasilge.github.io/tidytext/reference/stm_tidiers.html) from the `stm` package. More [here](https://rdrr.io/cran/tidytext/man/stm_tidiers.html).
## Reading

- [Introduction to cluster analysis](https://eight2late.wordpress.com/2015/07/22/a-gentle-introduction-to-cluster-analysis-using-r/)
- Tidy Natural Language Processing with [`cleanNLP`](https://scholarship.richmond.edu/cgi/viewcontent.cgi?article=1195&context=mathcs-faculty-publications)
- [Text from audio](https://www.cambridge.org/core/journals/political-analysis/article/testing-the-validity-of-automatic-speech-recognition-for-political-text-analysis/E375085D96331A47E810C01AA6DB0A46)
- Here is an [ok blog post on visualizing qualitative data](https://depictdatastudio.com/how-to-visualize-qualitative-data/); let me know if you find a better resource.

---

## Cool applications:

- [Gender tropes in film](https://pudding.cool/2017/08/screen-direction/)
- [Analysis of Trump's tweets confirms he writes only the (angrier) Android half](http://varianceexplained.org/r/trump-tweets/)
- [Every time Ford and Kavanaugh dodged a question, in one chart](https://www.vox.com/policy-and-politics/2018/9/28/17914308/kavanaugh-ford-question-dodge-hearing-chart)

---

class: inverse

# 1 Counting things in fancy ways.

--

With tidy text, counting words or phrases is simple:

- `unnest_tokens()` splits each response into tokens (by word by default, but we can also tokenize by phrases of length n, called n-grams).
- [optional] `anti_join(stop_words)` removes words that often have little meaning, like "a" and "the", called stop words. We can also do this with `filter(!(word %in% stop_words$word))`
- `count()` counts how many times each word appears (`count(word)` is like `group_by(word) %>% summarize(n = n()) %>% ungroup()`)

---

### Word frequency

Responses to ANES question "What do you dislike about [Democrats/Republicans]?"
(V161101, V161106)

```r
# Assumes here, tidyverse, tidytext, magrittr (for %<>%), and wordcloud are loaded
load(here("data/ANESdislikes.Rdata"))
d <- ANESdislikes
d[1,]
```

```
## # A tibble: 1 x 3
##   V160001 question       response
##     <int> <chr>          <chr>
## 1  300001 dislike_about… i see them trying take to much and give to the ho…
```

Tokenize by word

```r
words <- unnest_tokens(d, word, response)
head(words)
```

```
## # A tibble: 6 x 3
##   V160001 question          word
##     <int> <chr>             <chr>
## 1  300001 dislike_about_DEM i
## 2  300001 dislike_about_DEM see
## 3  300001 dislike_about_DEM them
## 4  300001 dislike_about_DEM trying
## 5  300001 dislike_about_DEM take
## 6  300001 dislike_about_DEM to
```

---

### Remove meaningless words

```r
# 1. anti_join() a data frame with a column of unwanted words called 'word'
words %<>% anti_join(stop_words)

# 2. Filter out strings we don't want, separated with "|" (RegEx "or")
unwanted_words <- "people|just|dont|like|about|democrat.|republican.|party|[0-9]"
words %<>% filter(!str_detect(word, unwanted_words))
```

### Count

```r
## Count the number of times each word occurs in each group
words %<>% group_by(question) %>% count(word)
head(words)
```

```
## # A tibble: 6 x 3
## # Groups:   question [1]
##   question          word            n
##   <chr>             <chr>       <int>
## 1 dislike_about_DEM abandoning      1
## 2 dislike_about_DEM abaout          1
## 3 dislike_about_DEM abe             1
## 4 dislike_about_DEM abilities       1
## 5 dislike_about_DEM ability         5
## 6 dislike_about_DEM abomination     1
```

---

```r
top_n(words, 10) %>% ## Top 10 words in each group
  ggplot( aes(x = reorder(word, n), y = n) ) +
  geom_col() +
  coord_flip() +
  facet_wrap("question", scales = "free_y", strip.position="top") +
  labs(x = "Word", y = "Count")
```

<img src="Figs/ANESfrequency-1.png" style="display: block; margin: auto;" />

---

```r
words %>%
  filter(question == "dislike_about_GOP") %>%
  with(wordcloud(word, n, max.words = 50))
```

---

Word clouds only show word frequency, and font size is hard to compare visually.
Nevertheless, [they may be useful](https://www.vis4.net/blog/2015/01/when-its-ok-to-use-word-clouds/) if all you care about is frequency.

---

Tokenize by word pair ("bi-gram")

```r
## Name the new column `bigram` (token = "ngrams" with n = 2)
bigrams <- unnest_tokens(d, bigram, response, token = "ngrams", n = 2)
head(bigrams)
```

```
## # A tibble: 6 x 3
##   V160001 question          bigram
##     <int> <chr>             <chr>
## 1  300001 dislike_about_DEM i see
## 2  300001 dislike_about_DEM see them
## 3  300001 dislike_about_DEM them trying
## 4  300001 dislike_about_DEM trying take
## 5  300001 dislike_about_DEM take to
## 6  300001 dislike_about_DEM to much
```

```r
## Count the number of times each bigram occurs in each group
bigrams %<>% group_by(question) %>% count(bigram)
```

---

```r
## Top 10 bigrams in each group
top_n(bigrams, 10) %>%
  ggplot( aes(x = reorder(bigram, n), y = n) ) +
  geom_col() +
  coord_flip() +
  facet_wrap("question", scales = "free_y", strip.position="top") +
  labs(x = "Bigram", y = "Count")
```

<img src="Figs/ANESfrequency-b-1.png" style="display: block; margin: auto;" />

---

class: inverse

# 2 Matching

## Global alignment (more counting than matching)

Used in: overall document similarity

## Local alignment

- Exact (`==`)
- Patterns (Regular expressions, `stringr`)
- Fuzzy patterns

Used in: text-reuse/plagiarism, web scraping, data cleaning

---

## Resources

Matching usually involves doing the same thing lots of times, so we use [functions](https://nicercode.github.io/guides/functions/)

- [`purrr`](https://www.rstudio.com/resources/cheatsheets/#purrr) offers tools to apply functions to data (instead of writing loops where we have to index everything)

Web scraping also requires manipulating HTML.

- [Khan Academy intro to HTML.](https://www.khanacademy.org/computing/computer-programming/html-css/intro-to-html/pt/html-basics)
- `rvest` offers tools for web scraping.
Here is a [tutorial on DataCamp](https://www.datacamp.com/community/tutorials/r-web-scraping-rvest0)

Once we have scraped text, we need to clean it and extract the things we care about, both tasks for [RegEx](https://www.rstudio.com/wp-content/uploads/2016/09/RegExCheatsheet.pdf)

- For tools to manipulate text, see the [stringr cheatsheet](https://www.rstudio.com/resources/cheatsheets/#stringr)
- Matt Denny has a [nice tutorial on cleaning text](http://www.mjdenny.com/Text_Processing_In_R.html)

---

## Global alignment

How similar pairs of texts are overall (i.e., statistically)

1. Count things, usually word frequencies.
   - Recall the [congressional letters example](https://judgelord.github.io/PS811/text-legislator-letters.html)
   - The result is a high-dimensional object. (If each word is a variable, we may have thousands of variables!)

2. Compare distributions
   - Cosine similarity scores (pairwise similarity score)
   - Dimensional scaling (reduce variation to one or two spatial dimensions)

---

<img src="Figs/seuss.jpg" width="500px" style="display: block; margin: auto;" /><img src="Figs/samedifferent.jpg" width="500px" style="display: block; margin: auto;" />

---

## Local alignment

---

#### Exact (`==`) vs. patterns (`str_detect("regular expression")`)

```r
strings <- c("Elmo", "Zoe", "Thing 1", "Thing 2", "are your politics more like Sesame Street or Dr Seuss?")

strings == "Elmo" # Exact match?
```

```
## [1]  TRUE FALSE FALSE FALSE FALSE
```

```r
strings == "Thing [0-9]" # Exact match?
```

```
## [1] FALSE FALSE FALSE FALSE FALSE
```

```r
str_detect(strings, "Thing [0-9]") # RegEx match?
```

```
## [1] FALSE FALSE  TRUE  TRUE FALSE
```

```r
str_extract_all(strings, "[A-Z][a-z]*", simplify = TRUE) # stringr functions: str_....
```

```
##      [,1]     [,2]     [,3] [,4]
## [1,] "Elmo"   ""       ""   ""
## [2,] "Zoe"    ""       ""   ""
## [3,] "Thing"  ""       ""   ""
## [4,] "Thing"  ""       ""   ""
## [5,] "Sesame" "Street" "Dr" "Seuss"
```

---

<img src="Figs/regex-animal.jpg" width="500px" style="display: block; margin: auto;" />

---

# Web scraping

`rvest`: `read_html()`, or `rvest` + `httr`: `read_html( httr::GET() )`

99% of web scraping is finding the information we want and wrangling it into a data frame.

```r
html <- read_html("https://www.UN.org/") # The UN homepage
links <- html_nodes(html, "a") # "a" nodes are linked text
html_text(links)[2:7] # The text for links 2-7
```

```
## [1] "国际提高地雷意识和协助地雷行动日-4月4日"
## [2] "International Mine Awareness Day - 4 April"
## [3] "Journée internationale pour la sensibilisation au problème des mines et l’assistance à la lutte antimines - 4 avril"
## [4] "Международный день просвещения по вопросам минной опасности и помощи в деятельности, связанной с разминированием — 4 апреля"
## [5] "Día Internacional de información sobre el peligro de las minas y de asistencia para las actividades relativas a las minas, 4 de abril"
## [6] "اليوم الدولي للتوعية بخطر الألغام\r\n\r\n4 نيسان/أبريل\r\n"
```

[Example: A list of companies lobbying the U.S.
Federal Energy Regulatory Commission](https://judgelord.github.io/correspondence/functions/DOE_FERC-company-scraper.html)

[Example: A table including the text of scanned pdf letters from Members of Congress](https://judgelord.github.io/correspondence/functions/DOE_FERC-scraper.html)

---

class: inverse center middle

## Text-reuse/plagiarism

---

## Measuring similarity:

- Percent copied words, n-grams, sentences
- Percent aligned
- Global alignment (word frequencies) or relative topic proportions

## Measuring change across versions:

- Percent new words, n-grams, sentences
- Percent unaligned
- Change in global alignment (word frequencies) or topic proportions

---

### For example, changes in budget text and committee reports [[see more]()]:

---

### When similar words mean different things, edits matter:

2010: `"CLIMATE CHANGE The Committee continues to support the Administration's efforts to address climate change. Funding for its voluntary climate change programs are continued through this bill."`

2012: `"CLIMATE CHANGE This Committee remains skeptical of the Administration's efforts to re-package existing programs and to fund new ones in the name of climate change."`

---

### Edits may introduce new ideas in otherwise aligned texts.

The Smith-Waterman local alignment algorithm:

2017: `"FDA is implementing the Food Safety Modernization Act FSMA `and the XXXXXXXXX motivation XXXXXX for XXXXX the generic drug labeling rule `and regulation of tobacco products"`

2018: `"FDA is implementing the Food Safety Modernization Act FSMA `XXX XXX addressing XXXXXXXXX opioid XXX abuse XXX XXXXXXX XXXX XXXXXXXX XXXX `and regulation of tobacco products"`

---

## [Smith-Waterman](http://fridolin-linder.com/resources/2016/03/30/local-alignment.html)

Tracing a path through the matrix of the two documents, maximizing matches (technically, points for sequences of matches and mismatches).
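
A minimal sketch of that scoring matrix in base R, working on word tokens. The helper name `sw_score()` and the scoring values (match = 2, mismatch = -1, gap = -1) are assumptions chosen for illustration, not the settings used in the linked tutorial or in any package. The two strings are the FDA budget sentences with the `X` padding removed.

```r
# Hypothetical helper: Smith-Waterman local alignment score over word tokens.
# Scoring values are illustrative assumptions.
sw_score <- function(a, b, match_score = 2, mismatch_score = -1, gap_score = -1) {
  # Lowercase and split on whitespace to get word tokens
  a <- strsplit(tolower(a), "\\s+")[[1]]
  b <- strsplit(tolower(b), "\\s+")[[1]]
  # H[i + 1, j + 1] is the best score of a local alignment ending at a[i], b[j]
  H <- matrix(0, nrow = length(a) + 1, ncol = length(b) + 1)
  for (i in seq_along(a)) {
    for (j in seq_along(b)) {
      step <- if (a[i] == b[j]) match_score else mismatch_score
      H[i + 1, j + 1] <- max(
        0,                         # start a new alignment here
        H[i, j] + step,            # align a[i] with b[j]
        H[i, j + 1] + gap_score,   # skip a word in a
        H[i + 1, j] + gap_score    # skip a word in b
      )
    }
  }
  max(H)  # the highest cell marks the end of the best local alignment
}

doc_2017 <- "FDA is implementing the Food Safety Modernization Act FSMA and the motivation for the generic drug labeling rule and regulation of tobacco products"
doc_2018 <- "FDA is implementing the Food Safety Modernization Act FSMA addressing opioid abuse and regulation of tobacco products"

sw_score(doc_2017, doc_2018)
```

Because scores never drop below zero, an alignment can restart after an edited passage, which is why the shared opening and closing phrases can both count toward the score.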
<img src="Figs/smith-waterman.png" width="500px" style="display: block; margin: auto;" />

---

## N-gram window

Finding all exact matches of n words or more. With a 4-gram window:

2010: "`CLIMATE CHANGE The Committee` continues to support `the Administration's efforts to` address climate change. Funding for its voluntary climate change programs are continued through this bill."

2012: "`CLIMATE CHANGE This Committee` remains skeptical of `the Administration's efforts to` re-package existing programs and to fund new ones in the name of climate change."

From this example: [Change and influence in budget texts]()

Another example: [Public comments on proposed regulations]()

---
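
A minimal sketch of an n-gram window in base R, assuming simple tokenization (lowercase, punctuation other than apostrophes dropped, split on whitespace); the analysis behind the example above may tokenize differently. `ngrams()` and `shared_ngrams()` are hypothetical helper names, not functions from `tidytext` or `stringr`.

```r
# Hypothetical helper: every n-word window in a text
ngrams <- function(text, n = 4) {
  # Lowercase, replace punctuation (except apostrophes) with spaces, split on whitespace
  clean <- gsub("[^a-z' ]", " ", tolower(text))
  words <- strsplit(trimws(clean), "\\s+")[[1]]
  if (length(words) < n) return(character(0))
  starts <- seq_len(length(words) - n + 1)
  vapply(starts, function(i) paste(words[i:(i + n - 1)], collapse = " "), character(1))
}

# Hypothetical helper: n-grams that appear in both texts
shared_ngrams <- function(x, y, n = 4) intersect(ngrams(x, n), ngrams(y, n))

text_2010 <- "CLIMATE CHANGE The Committee continues to support the Administration's efforts to address climate change."
text_2012 <- "CLIMATE CHANGE This Committee remains skeptical of the Administration's efforts to re-package existing programs and to fund new ones in the name of climate change."

shared_ngrams(text_2010, text_2012, n = 4)
```

A smaller `n` returns shorter shared phrases; a larger `n` is stricter about what counts as reuse.

---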